Summary

Robots need to solve open-ended, real-world problems. To do so, they need to plan in open-world domains for underspecified problems, which classical planners cannot solve on their own. We discuss methods that leverage LLMs alongside classical planners to produce efficient and successful plans.

By Ishika Singh

Everyday household tasks require both a common sense understanding of the world and situated knowledge about the current environment. To create a task plan for “Make dinner”, an agent needs common sense about several things. The first is object affordances, such as the fact that the stove can be used to make food. The second is logical sequences of actions, such as the fact that the oven must be preheated before putting the food in. The third is the task relevance of objects and actions, such as the fact that food and heating are related to “making dinner” in the first place. This reasoning also needs to be situated in the agent’s current working environment. For example, the agent should know whether the fridge contains salmon or tofu.

While this problem sounds complicated, numerous solutions have been proposed for it. These solutions fall along a spectrum. At one end is symbolic or classical planning, which requires an extensive domain definition of object affordances, action-sequencing logic, and so on. At the other end are Large Language Models (LLMs), which provide approximate plans based on common sense without much definition. In the following sections, we look at a precise formulation of this seemingly open-ended problem, followed by several approaches for solving it.

Background: Problem Formulation

This planning problem is often formulated as a tuple

$$\mathcal{P} = \langle S, s_{init}, S_G, A, O, P, T \rangle,$$

where:

- $S$ is a finite and discrete set of environment states;
- $s_{init} \in S$ is the initial state;
- $S_G \subseteq S$ is the set of goal states that satisfy a goal condition $g$;
- $A$, $O$, and $P$ are sets of symbolic actions, objects, and environment predicates, respectively. A state is defined as a list of environment predicates, applied to objects and agents, that hold true;
- $T: S \times A \rightarrow S$ is the underlying transition function that defines how the state conditions change when an action $a \in A$ is executed in the environment.

A solution to $\mathcal{P}$ is a symbolic plan $\pi$, given as a sequence of actions $\pi = \langle a_1, a_2, \ldots, a_n \rangle$. The preconditions of each $a_i$ must hold in the state $s_{i-1}$, where $s_0 = s_{init}$, $s_i = T(s_{i-1}, a_i)$, and $s_n \in S_G$.
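The following minimal Python sketch makes the formulation concrete. It assumes STRIPS-style actions: each action has a set of precondition predicates, an add list, and a delete list. This is one common way to realize $T$, not something the formulation above prescribes. States are frozensets of ground predicates, and $S_G$ is represented implicitly as every state that contains the goal condition $g$. All action and predicate names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Sequence

State = FrozenSet[str]  # a state is the set of ground predicates that hold true

@dataclass(frozen=True)
class Action:
    name: str
    pre: FrozenSet[str]     # preconditions that must hold before execution
    add: FrozenSet[str]     # predicates made true by the action
    delete: FrozenSet[str]  # predicates made false by the action

def transition(s: State, a: Action) -> State:
    """T(s, a): remove the delete effects, then add the add effects."""
    return (s - a.delete) | a.add

def is_solution(s_init: State, goal: FrozenSet[str], plan: Sequence[Action]) -> bool:
    """Check that each a_i's preconditions hold in s_{i-1} and that s_n satisfies g."""
    s = s_init
    for a in plan:
        if not a.pre <= s:
            return False
        s = transition(s, a)
    return goal <= s

# Hypothetical toy actions
walk = Action("walk_to_fridge", frozenset({"at(robot,table)"}),
              frozenset({"at(robot,fridge)"}), frozenset({"at(robot,table)"}))
open_fridge = Action("open_fridge", frozenset({"at(robot,fridge)"}),
                     frozenset({"open(fridge)"}), frozenset())
s0 = frozenset({"at(robot,table)"})
g = frozenset({"open(fridge)"})

print(is_solution(s0, g, [walk, open_fridge]))  # True
print(is_solution(s0, g, [open_fridge]))        # False: the robot is not at the fridge yet
```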

Classical Planning

Classical or automated task planning algorithms have been widely applied in autonomous spacecraft, military logistics, manufacturing, games, and robotics. The automated STRIPS planner, for example, operated the Shakey robot in 1970. Basic classical planning algorithms assume a finite state space, deterministic actions, and full state information. Under these assumptions, they are guaranteed to generate a plan whenever a path from the initial state to a goal state exists. The Planning Domain Definition Language (PDDL) and answer set programming (ASP) are popular specification formats for planning domains. Let’s learn more about PDDL and how classical planning can be applied with this planning language.

The Planning Domain Definition Language (PDDL) serves as a standardized encoding language for representing planning problems. For simplicity, let’s consider deterministic, fully observable planning problems. A planning problem in PDDL is represented by two files: a domain file and a problem file.

The domain file defines the environment in terms of object types and predicates. It then defines actions with their parameters, required preconditions, and postcondition effects, as shown on the left side of the figure above. Some state conditions relate to the agent (atLocation ?a ?l), and others relate to the environment (objectAtLocation ?o ?l). The problem file defines an instance of the domain with a list of objects, the initial state, and the goal conditions, as shown on the right side of the figure.

A PDDL planner takes in a domain file and a problem file. It searches, typically guided by heuristics, for action sequences leading from the initial state to a goal state. It then returns the shortest such plan it finds, if any, as shown in the bottom right part of the figure.
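At its core, a planner's job can be sketched as search over states. The Python below is a minimal breadth-first search over already-grounded STRIPS-style actions. This is a simplification: real PDDL planners also parse the domain and problem files, ground the actions, and usually rely on heuristic search. Because breadth-first search expands states in order of plan length, the plan it returns has the fewest actions. The predicate names mirror atLocation and objectAtLocation from the figure, but the toy domain (a robot fetching an apple) is hypothetical.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional, Tuple

# A grounded action: (name, preconditions, add effects, delete effects)
Action = Tuple[str, FrozenSet[str], FrozenSet[str], FrozenSet[str]]

def bfs_plan(init: FrozenSet[str], goal: FrozenSet[str],
             actions: List[Action]) -> Optional[List[str]]:
    """Return a shortest action sequence from init to a state satisfying goal, or None."""
    frontier = deque([init])
    parent: Dict[FrozenSet[str], Optional[Tuple[FrozenSet[str], str]]] = {init: None}
    while frontier:
        state = frontier.popleft()
        if goal <= state:
            # Walk back through parent links to recover the plan.
            plan = []
            while parent[state] is not None:
                prev, name = parent[state]
                plan.append(name)
                state = prev
            return plan[::-1]
        for name, pre, add, delete in actions:
            if pre <= state:
                nxt = (state - delete) | add
                if nxt not in parent:
                    parent[nxt] = (state, name)
                    frontier.append(nxt)
    return None  # goal unreachable from init

def fs(*preds: str) -> FrozenSet[str]:
    return frozenset(preds)

actions: List[Action] = [
    ("move robot kitchen table", fs("atLocation robot kitchen"),
     fs("atLocation robot table"), fs("atLocation robot kitchen")),
    ("move robot table kitchen", fs("atLocation robot table"),
     fs("atLocation robot kitchen"), fs("atLocation robot table")),
    ("pick robot apple table",
     fs("atLocation robot table", "objectAtLocation apple table", "handEmpty robot"),
     fs("holding robot apple"), fs("objectAtLocation apple table", "handEmpty robot")),
    ("place robot apple kitchen", fs("atLocation robot kitchen", "holding robot apple"),
     fs("objectAtLocation apple kitchen", "handEmpty robot"), fs("holding robot apple")),
]
init = fs("atLocation robot kitchen", "objectAtLocation apple table", "handEmpty robot")
goal = fs("objectAtLocation apple kitchen")

plan = bfs_plan(init, goal, actions)
print(plan)
# ['move robot kitchen table', 'pick robot apple table',
#  'move robot table kitchen', 'place robot apple kitchen']
```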

LLMs for Planning

Basic LLM for Planning

A Large Language Model (LLM) is a neural network with many parameters (potentially hundreds of billions), trained on unsupervised learning objectives such as next-token prediction or masked-language modelling. An autoregressive LLM is trained with a maximum likelihood loss to model the probability of a sequence of tokens $y$ conditioned on an input sequence $x$:

$$\theta = \arg\max_{\theta} P(y \mid x; \theta),$$

where $\theta$ are the model parameters. The trained LLM is then used for prediction:

$$\hat{y} = \arg\max_{y \in \mathcal{S}} P(y \mid x; \theta),$$

where $\mathcal{S}$ is the set of all text sequences.
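An autoregressive model factorizes $P(y \mid x; \theta) = \prod_t P(y_t \mid x, y_{<t}; \theta)$. The argmax over all of $\mathcal{S}$ is intractable, so decoding is approximated in practice. Greedy decoding is one such approximation: it picks the most likely next token at each step, which does not always yield the globally most likely sequence. In the toy Python below, a hand-written bigram table stands in for a trained LLM; it is hypothetical and serves only to illustrate the factorization and greedy decoding.

```python
import math
from typing import Dict, List

# Hypothetical next-token distributions P(next | previous token). A real LLM
# conditions on the entire prefix (x and all earlier y tokens), not just one token.
BIGRAM: Dict[str, Dict[str, float]] = {
    "<prompt>": {"open": 0.6, "grab": 0.4},
    "open": {"fridge": 0.7, "door": 0.3},
    "grab": {"salmon": 0.5, "tofu": 0.5},
    "fridge": {"<eos>": 1.0},
    "door": {"<eos>": 1.0},
    "salmon": {"<eos>": 1.0},
    "tofu": {"<eos>": 1.0},
}

def sequence_log_prob(x: str, y: List[str]) -> float:
    """log P(y | x) = sum_t log P(y_t | x, y_<t), truncated here to a bigram context."""
    prev, total = x, 0.0
    for tok in y:
        total += math.log(BIGRAM[prev][tok])
        prev = tok
    return total

def greedy_decode(x: str, max_len: int = 10) -> List[str]:
    """Approximate argmax_y P(y | x) by taking the most likely token at each step."""
    out: List[str] = []
    prev = x
    for _ in range(max_len):
        dist = BIGRAM[prev]
        tok = max(dist, key=dist.get)
        if tok == "<eos>":
            break
        out.append(tok)
        prev = tok
    return out

print(greedy_decode("<prompt>"))  # ['open', 'fridge']
print(round(math.exp(sequence_log_prob("<prompt>", ["open", "fridge", "<eos>"])), 2))  # 0.42
```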

LLMs are trained on large text corpora and exhibit multi-task generalization when provided with a relevant prompt input $x$. Prompting LLMs to generate text useful for robot task planning has recently emerged as a popular approach. However, prompt design is challenging because there is little paired data linking natural language instructions to executable plans or robot action sequences.

Devising a prompt for task plan prediction can be broken down into a prompting function and an answer search strategy. A prompting function, $f_{prompt}(\cdot)$, transforms the input state observation $s$ into a textual prompt. Answer search is the generation step. In this step, the LLM either generates freely over its entire vocabulary or scores a predefined set of options.

SayCan uses natural language prompting with LLMs to generate a set of feasible planning steps, and re-scores matched admissible actions using a learned value function. This scoring is challenging in environments with combinatorial action spaces, since every admissible action must be enumerated and scored. SayCan also assumes a 1:1 mapping, in which each LLM output can be mapped to exactly one action.
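The Python sketch below pairs a prompting function with a scoring-style answer search in the spirit of SayCan. `toy_llm` and `toy_value` are hypothetical stubs for the LLM's probability of an option and for the learned value function. Combining the two scores by multiplication is our simplifying assumption. The selection step enumerates every admissible action, which shows why combinatorial action spaces are a problem for this strategy.

```python
from typing import Callable, List

def f_prompt(observation: List[str], instruction: str, history: List[str]) -> str:
    """Turn a textual state observation, the task, and past steps into a prompt."""
    return (
        f"Objects: {', '.join(observation)}\n"
        f"Task: {instruction}\n"
        "Steps so far: " + "; ".join(history) + "\nNext step:"
    )

def score_and_select(prompt: str, options: List[str],
                     llm_option_prob: Callable[[str, str], float],
                     affordance_value: Callable[[str], float]) -> str:
    """Score every admissible action (one option maps to one action) and pick the best."""
    return max(options, key=lambda a: llm_option_prob(prompt, a) * affordance_value(a))

# Hypothetical stand-ins for the LLM and the learned value function.
def toy_llm(prompt: str, option: str) -> float:
    return {"open fridge": 0.5, "pick up salmon": 0.4, "turn on stove": 0.1}[option]

def toy_value(option: str) -> float:
    # In this toy setup the salmon is on the counter next to the robot,
    # while the fridge is far away, so opening it is less feasible right now.
    return {"open fridge": 0.2, "pick up salmon": 0.9, "turn on stove": 0.8}[option]

prompt = f_prompt(["fridge", "salmon", "stove"], "make dinner", [])
best = score_and_select(prompt, ["open fridge", "pick up salmon", "turn on stove"],
                        toy_llm, toy_value)
print(best)  # pick up salmon
```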

Our paper proposes ProgPrompt, an LLM prompting scheme that solves the planning problem by leveraging both the commonsense and program-generation capabilities of LLMs. We leverage features of programming languages in our prompts. Each prompt includes import statements to model robot capabilities, natural language comments to elicit commonsense reasoning, and assertions to track execution state. For answer search, we let the LLM generate an entire, executable plan program directly. This use of programming-language structures takes advantage of the fact that LLMs are trained on vast web corpora that include many programming tutorials and code documentation.

ProgPrompt provides an LLM with a Pythonic program header that imports available actions and their expected parameters and shows a list of environment objects. The header then defines functions like “make dinner” whose bodies are sequences of actions operating on objects. We incorporate situated state feedback from the environment by asserting the preconditions of our plan, such as being close to the dishwasher before attempting to open it, and by responding to failed assertions with recovery actions. What’s more, we show that adding natural language comments to ProgPrompt programs, explaining the goal of the upcoming action, improves the task success of the generated plan programs.
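The shape of such a plan program can be illustrated with a runnable toy in plain Python. ProgPrompt's actual prompts list the available actions as import statements and use their own assertion-with-recovery notation. Here, the primitives `walk`, `grab`, `open_item`, and `put_in` are hypothetical stubs that update a toy world. `check` is a hypothetical helper that plays the role of an assertion with a recovery action. The numbered comments mirror the natural language comments described above.

```python
# Toy world state; a real system would query the simulator or robot perception.
world = {"close_to": None, "in_hand": None, "opened": set()}
executed = []

# Hypothetical action primitives (stand-ins for the imported robot actions).
def walk(obj):
    world["close_to"] = obj
    executed.append(f"walk {obj}")

def grab(obj):
    world["in_hand"] = obj
    executed.append(f"grab {obj}")

def open_item(obj):
    world["opened"].add(obj)
    executed.append(f"open {obj}")

def put_in(obj, container):
    world["in_hand"] = None
    executed.append(f"putin {obj} {container}")

def close_to(obj):
    return world["close_to"] == obj

def check(condition, recovery):
    """Assert a precondition; if it fails, run a recovery action instead of aborting."""
    if not condition():
        recovery()

objects = ["plate", "dishwasher", "table"]

def put_plate_in_dishwasher():
    # 1: grab the plate from the table
    check(lambda: close_to("plate"), lambda: walk("plate"))
    grab("plate")
    # 2: open the dishwasher
    check(lambda: close_to("dishwasher"), lambda: walk("dishwasher"))
    open_item("dishwasher")
    # 3: put the plate in the dishwasher
    check(lambda: "dishwasher" in world["opened"], lambda: open_item("dishwasher"))
    put_in("plate", "dishwasher")

put_plate_in_dishwasher()
print(executed)
# ['walk plate', 'grab plate', 'walk dishwasher', 'open dishwasher', 'putin plate dishwasher']
```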

Following ProgPrompt, ChatGPT for Robotics further explores API-based planning with ChatGPT in domains such as aerial robotics, manipulation, and visual navigation. Its authors discuss design principles for constructing interaction APIs for action and perception, as well as prompts that can be used to generate code for robotic applications. CLAIRify proposes iterative error correction via a syntax verifier. The verifier repeatedly prompts the LLM with the previous query appended with a list of errors. VisProg extends the LLM code generation and API-based perceptual interaction approach to a variety of vision-language tasks.
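The Python below sketches the verifier-in-the-loop idea. CLAIRify targets a domain-specific language. As a stand-in, this sketch uses Python's own `ast.parse` as the syntax verifier. `fake_llm` is a hypothetical generator that returns a broken program first and a fixed one once its prompt contains an error list.

```python
import ast
from typing import Callable, List, Optional

def verify(code: str) -> List[str]:
    """Return a list of syntax errors (empty if the code parses)."""
    try:
        ast.parse(code)
        return []
    except SyntaxError as e:
        return [f"line {e.lineno}: {e.msg}"]

def generate_with_verifier(query: str, llm: Callable[[str], str],
                           max_rounds: int = 3) -> Optional[str]:
    """Re-prompt the LLM with the previous query plus the verifier's errors until it parses."""
    prompt = query
    for _ in range(max_rounds):
        code = llm(prompt)
        errors = verify(code)
        if not errors:
            return code
        prompt = prompt + "\nErrors:\n" + "\n".join(errors)
    return None  # give up after max_rounds

def fake_llm(prompt: str) -> str:
    # Hypothetical LLM: an unclosed parenthesis first, then a fixed program.
    if "Errors:" in prompt:
        return "walk('fridge')\nopen('fridge')\n"
    return "walk('fridge'\nopen('fridge')\n"

result = generate_with_verifier("Write a plan to open the fridge.", fake_llm)
print(result)
```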

Scaling to multiple problem domains

LLMs cannot reliably solve long-horizon robot planning problems. Even in ProgPrompt, we could only report success rates for LLM-generated plans, with no guarantee that any given plan is correct. By contrast, once a problem is given in a formal specification, classical planners can use efficient search algorithms to quickly identify correct, or even optimal, plans. However, LLMs can map an arbitrary natural language problem to a situated solution, which classical planners cannot do.

LLM+P proposes the best of both worlds: a framework that incorporates the strengths of classical planners into LLMs. LLM+P first converts the language description into a file written in the Planning Domain Definition Language (PDDL). It then uses classical planners to quickly find a solution.
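The Python below sketches only the output side of LLM+P's first stage, which is producing a PDDL problem file. `translate_stub` is a hypothetical stand-in for the LLM translation step. It returns objects, initial facts, and goal facts for one fixed instruction. `to_pddl_problem` renders those facts in PDDL problem syntax. The domain name `household` and the predicates are also hypothetical.

```python
from typing import Dict, List

def translate_stub(instruction: str) -> Dict[str, List[str]]:
    """Hypothetical stand-in for the LLM that maps a language description to PDDL facts."""
    return {
        "objects": ["robot", "plate", "dishwasher", "table"],
        "init": ["(at robot table)", "(on plate table)"],
        "goal": ["(in plate dishwasher)"],
    }

def to_pddl_problem(name: str, domain: str, spec: Dict[str, List[str]]) -> str:
    """Render objects, initial state, and goal as a PDDL problem definition."""
    init = "\n    ".join(spec["init"])
    goal = " ".join(spec["goal"])
    return (
        f"(define (problem {name})\n"
        f"  (:domain {domain})\n"
        f"  (:objects {' '.join(spec['objects'])})\n"
        f"  (:init\n    {init})\n"
        f"  (:goal (and {goal})))\n"
    )

problem = to_pddl_problem("dishes", "household",
                          translate_stub("Put the plate in the dishwasher."))
print(problem)
```

The resulting string could be written to a problem file and passed, together with a matching domain file, to an off-the-shelf classical planner.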


Scaling to multiple agents and parallelizing plan execution

Symbolic planning problems specified in PDDL typically describe single-agent plans, and changing these domain specifications to enable planning with multiple agents requires a human expert. Multi-agent planning also causes exponential growth in the search space of possible plans, since multiple agents can each take an action at every timestep. Our work, TwoStep, explores whether LLMs' commonsense reasoning can exploit the lexical semantics of an expert-written planning domain. These semantics are encoded in the variable names, action names, and other ontological names of the domain. The goal is to predict subgoals for individual agents that, when executed together, achieve a given global goal.
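A back-of-the-envelope count shows the blow-up. For illustration, assume that each of $k$ agents chooses independently among the same $|A|$ grounded actions (including a no-op) at every timestep. Then there are $|A|^k$ joint actions per step and $|A|^{kH}$ joint plans of horizon $H$. The numbers below are hypothetical.

```python
def num_joint_plans(num_actions: int, num_agents: int, horizon: int) -> int:
    """Joint action sequences: |A| ** k joint choices per step, repeated over H steps."""
    return (num_actions ** num_agents) ** horizon

print(num_joint_plans(10, 1, 5))  # 100000
print(num_joint_plans(10, 2, 5))  # 10000000000
```

Adding a second agent in this toy setting squares the number of candidate plans, which is why decomposing the problem into single-agent subproblems is attractive.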

TwoStep decomposes a multi-agent planning problem into two single-agent planning problems. It does so by leveraging the commonsense reasoning of LLMs, contextualized with how humans operate together in diverse scenarios. In particular, we consider a two-agent planning problem with a helper and a main agent.

For a given problem $\mathcal{P}$, the helper uses a planner $\mathcal{C}$ to plan a path from $\mathcal{P}$'s initial state $s_{init}$ to a subgoal state $s_g^h$. This subgoal state then serves as the initial state $s_{init}^m$ for the main agent, i.e., $s_{init}^m = PE(s_{init}, \pi_h) = s_g^h$. Here $\pi_h$ is the helper's plan and $PE$ refers to Plan Execution. From $s_{init}^m$, the main agent plans with $\mathcal{C}$ to reach $\mathcal{P}$'s specified goal $S_G$.

The helper's subgoal is produced by two modules. Subgoal Generation produces a possible helper subgoal in English (the English Subgoal). Subgoal Translation then translates the English Subgoal into a PDDL goal (the PDDL Subgoal). We show that the commonsense reasoning abilities of LLMs can be leveraged in this context to predict helper-agent subgoals whose plan actions can be executed in parallel with those of the main agent. The main agent takes for granted that the helper will eventually achieve the subgoal, which leads to shorter execution lengths overall.
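The execution-length benefit can be illustrated with a toy step-synchronous simulation. It rests on simplifying assumptions of our own, not on TwoStep's exact evaluation. Both agents execute one action per timestep. A main-agent action flagged as depending on the helper's subgoal waits until the helper has finished its whole plan. The potato-boiling actions are hypothetical.

```python
from typing import List, Tuple

def parallel_execution_length(helper_plan: List[str],
                              main_plan: List[Tuple[str, bool]]) -> int:
    """Timesteps until both plans finish, with one action per agent per step.

    main_plan entries are (action, needs_helper_subgoal); such actions wait
    until every helper action has been executed in an earlier timestep.
    """
    h = m = t = 0
    while h < len(helper_plan) or m < len(main_plan):
        subgoal_reached = h == len(helper_plan)  # status at the start of this timestep
        t += 1
        if h < len(helper_plan):
            h += 1  # helper executes its next action
        if m < len(main_plan):
            _, needs_subgoal = main_plan[m]
            if subgoal_reached or not needs_subgoal:
                m += 1  # main agent executes its next action; otherwise it waits
    return t

helper = ["fill pot with water", "put pot on stove", "turn on stove"]
main = [("grab potato", False), ("slice potato", False), ("put slices in pot", True)]
print(parallel_execution_length(helper, main))  # 4
print(len(helper) + len(main))                  # 6 steps if a single agent did everything
```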

Figure: An example with a helper and a main agent. For the task of “boiling potato slices”, the helper tries to extract and complete a partially independent subgoal that reduces the steps for the main agent, while the main agent completes the remaining task. The plans generated for both agents are then executed to find the execution length.

Scaling to multiple domains and environments

Classical planning requires extensive domain knowledge to produce a sequence of actions that achieves a specified goal. The domain contains expert-annotated action semantics that govern the dynamics of the environment. For example, traditional symbolic methods, like Planning Domain Definition Language (PDDL) solvers, have action semantics annotated in a domain file. Classical planning algorithms systematically explore the state space based on the actions that the domain definition allows in each state. Therefore, if the domain is correct and the specified goal is achievable, the resulting plan is guaranteed to succeed. However, exhaustively defining the domain to enable classical planning in an environment is tedious and requires a human expert for every new environment.

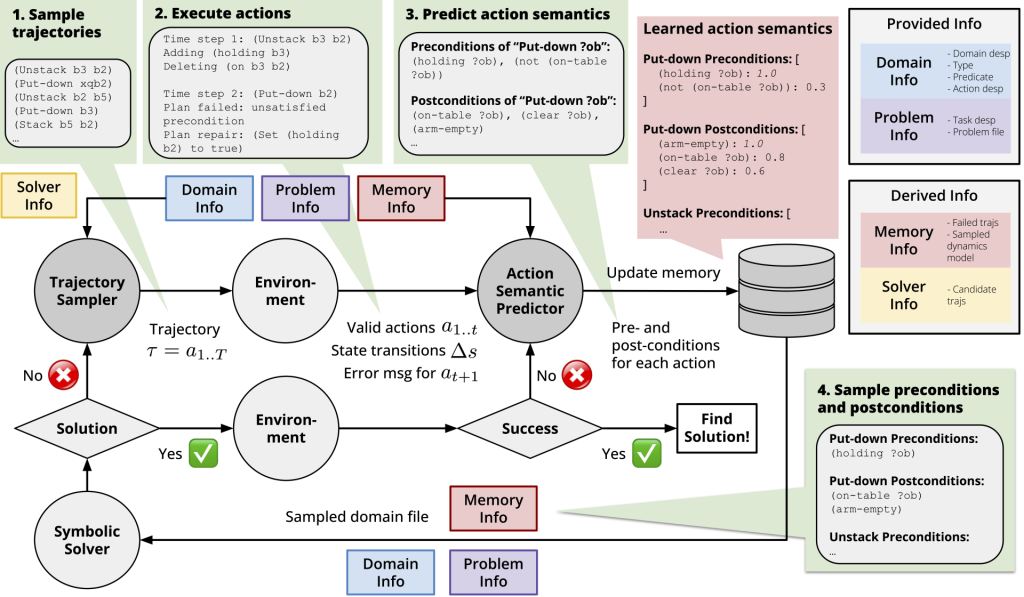

PSALM introduces a novel domain induction task setting in PDDL, in which an agent must automatically infer the action semantics of an environment without manual annotation or error correction. In this setting, domain information such as object properties and action function headers is given to the agent. The agent is then asked to infer the action semantics by interacting with the environment and learning from the resulting feedback. Our proposed setting is motivated by a longer-term goal: building real-world robots that can explore a new environment (e.g., a kitchen) and learn to perform new tasks in it (e.g., cleaning) without a human specifying the environment.

As shown in the figure, given the task we want to solve, we first use an LLM as the trajectory sampler to sample trajectories. We then execute these trajectories in the environment to get feedback. Next, we use the LLM again, together with a rule-based parser, as the action semantics predictor. Based on the environment feedback, it predicts the action semantics of each action, i.e., its preconditions and postconditions. We update the memory of learned action semantics. Finally, we pass a sample from the current belief over action semantics to the symbolic solver to check whether it yields a plan.

If the symbolic solver finds a solution, we execute the plan in the environment to check whether it succeeds. If the plan reaches the goal in the environment, we finish the loop. Otherwise, we pass the result of the failed plan to the LLM to predict the action semantics again. If the symbolic solver does not find a solution, we provide some partial candidate trajectories from the symbolic solver to the trajectory sampler to seed the next sampled trajectories.
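The control flow of this loop can be sketched in Python with the LLM-based components passed in as functions. The sketch is our simplification. `sample_trajectories`, `execute`, `predict_semantics`, and `solve` are hypothetical callables, and the toy stubs at the bottom exist only to exercise the loop; they are not PSALM's components.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Semantics = Dict[str, Any]  # action name -> predicted (preconditions, effects)

def induce_domain(
    sample_trajectories: Callable[[Semantics, List[Any]], List[List[str]]],
    execute: Callable[[List[str]], Dict[str, Any]],
    predict_semantics: Callable[[List[Dict[str, Any]], Semantics], Semantics],
    solve: Callable[[Semantics], Tuple[Optional[List[str]], List[Any]]],
    max_iters: int = 10,
) -> Tuple[Optional[List[str]], Semantics]:
    memory: Semantics = {}
    seeds: List[Any] = []
    for _ in range(max_iters):
        # 1. Sample trajectories (seeded by partial solver plans) and execute them.
        feedback = [execute(t) for t in sample_trajectories(memory, seeds)]
        # 2. Predict pre/postconditions from the feedback and update memory.
        memory.update(predict_semantics(feedback, memory))
        # 3. Ask the symbolic solver for a plan under the current belief.
        plan, partial = solve(memory)
        if plan is None:
            seeds = partial  # seed the next round of trajectory sampling
            continue
        # 4. Execute the plan; stop on success, otherwise learn from the failure.
        result = execute(plan)
        if result["success"]:
            return plan, memory
        memory.update(predict_semantics([result], memory))
    return None, memory

# Hypothetical toy stubs: opening the fridge requires walking to it first.
def sampler(memory, seeds):
    return [["walk fridge", "open fridge"]]

def execute(traj):
    return {"trajectory": traj, "success": traj == ["walk fridge", "open fridge"]}

def predict(feedback, memory):
    if any(f["success"] for f in feedback):
        return {"open fridge": ({"near fridge"}, {"opened fridge"})}
    return {}

def solve(memory):
    return (["walk fridge", "open fridge"], []) if "open fridge" in memory else (None, [])

plan, learned = induce_domain(sampler, execute, predict, solve)
print(plan)  # ['walk fridge', 'open fridge']
```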

This method enables automated, LLM-guided extraction of the underlying action semantics (i.e., the domain) by generating approximate plans. It also provides a way to deploy classical planning in novel scenarios, warm-started via LLMs, giving us the best of both worlds!

Future Work: For tasks like “make dinner”, the works presented here do not show how to handle user preferences. Future work could take user preferences into account during planning, which LLM-based planning systems are capable of handling. Two other open problems are planning with more than two agents and recovering PDDL domains for unstructured environments while incorporating visual feedback. We have only begun scratching the surface of LLMs + classical planning systems for robotics and are excited about exploring future possibilities!

This post is based on the following papers:

- ProgPrompt: Generating Situated Robot Task Plans using Large Language Models. Ishika Singh, Valts Blukis, Arsalan Mousavian, Ankit Goyal, Danfei Xu, Jonathan Tremblay, Dieter Fox, Jesse Thomason, Animesh Garg; ICRA 2023, Autonomous Robots 2023
- TwoStep: Multi-agent Task Planning using Classical Planners and Large Language Models. Ishika Singh, David Traum, Jesse Thomason; arXiv 2024
- Language Models can Infer Action Semantics for Classical Planners from Environment Feedback. Wang Zhu, Ishika Singh, Robin Jia, Jesse Thomason; arXiv 2024